How to report a security issue in an open source project

So you’ve found a security issue in an open source project – or maybe just a weird problem that you think might be a security problem. What should you do next?

How to report a security issue in an open source project

- Make a reasonable effort to report the issue privately to the maintainer(s).

- Give them a reasonable amount of time to fix the issue in private.

- If this fails, you may disclose the issue publicly.

This general outline is broadly agreed upon as good practice within the security and open source communities. Deviating from it is borderline irresponsible and anti-social, and certainly not good open source community behavior.

That’s it, that’s the post, you can stop reading now if this makes good enough sense to you. Of course, the devil’s in the details – “reasonable” is doing a lot of heavy lifting up there – so I’ll attempt to address some of those details in the form of an anticipated Q&A. If you have other questions I haven’t answered, feel free to get in touch and I’ll try to add them.

Q&A

Why has this become the generally-accepted standard?

Fundamentally, we’re trying to optimize for safety. To understand how this procedure does this, let’s look at two different ways security disclosure might fail:

Say you report the issue but the project never fixes it and you never disclose publicly. Now there’s a security issue that affects all users of that software, and it might some day be exploited. Sure, the issue is secret – but only for now. Eventually, someone else might discover it, and they might choose to exploit it.

Say, on the other hand, you make no attempt to have the issue fixed privately, and instead immediately disclose it publicly. This in effect starts a race between attackers and defenders. Now that it’s public, attackers will try to weaponize the exploit as quickly as possible; meanwhile, defenders scramble to update or replace vulnerable software. Without time to develop a fix, maintainers might not be able to make one quickly – or might release a fix that’s buggy or incomplete.

This procedure, then, is a compromise. It gives maintainers sufficient time to fix issues privately to avoid this mad scramble, but puts a limit on the amount of time an issue can go unfixed. Giving the project time to make sure proper, well-tested updates are available means that it’s as easy as possible for defenders to update their systems, and allowing public disclosure after a certain period of time without a fix serves as a backstop to protect users.

How should I go about reporting an issue privately?

Ideally, the project will have a guide to reporting security issues (here’s Django’s as an example) – that guide should have contact info, typically a dedicated security email address.

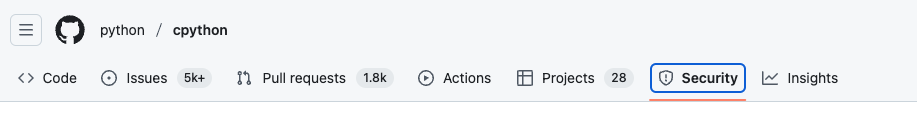

Projects hosted on GitHub might have a “Security” tab:

Clicking that tab will show you security reporting information, and for some projects allow reporting security issues through GitHub’s security-reporting feature (which is private).

If you can’t find a security page, or security-specific contact info, but can find a maintainer’s contact info, or if there’s a general contact form/email for the project as a whole, it’s ok to use that.

What’s not OK: opening a public issue on their bug tracker; starting a public forum post; posting about the issue to a public email list; blogging about the issue; tweeting/tooting/skeeting/whatever about it publicly; and so forth. At least, not without first exhausting your other options (see below).
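If you have a local checkout of the project, the first step of that search can be sketched in a few lines of Python. This is only an illustration: the function name `find_security_policy` is made up for this example, and it only checks the three locations where GitHub looks for a `SECURITY.md` file (the repository root, `.github/`, and `docs/`). Projects may keep their reporting guide elsewhere, such as in their documentation or on their website, so a miss here doesn’t mean there’s no private channel.

```python
from pathlib import Path
from typing import Optional

# Directories (relative to the repository root) where GitHub looks for a
# security policy file. Other hosts and projects may use other conventions.
CANDIDATE_DIRS = ("", ".github", "docs")
POLICY_FILENAME = "SECURITY.md"


def find_security_policy(repo_root: str) -> Optional[Path]:
    """Return the path of the first SECURITY.md found, or None.

    Hypothetical helper for illustration; it only inspects a local checkout
    and does not contact GitHub or any other service.
    """
    root = Path(repo_root)
    for directory in CANDIDATE_DIRS:
        candidate = root / directory / POLICY_FILENAME
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    policy = find_security_policy(".")
    if policy is None:
        print("No SECURITY.md found; look for maintainer or project contact info.")
    else:
        print(f"Security policy found at: {policy}")
```

If this returns nothing, fall back to the other options above: a maintainer’s contact info or a general project contact address.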

How much effort is “reasonable” before I conclude I can’t find private contact info?

There’s no strongly agreed-upon time limit here, but I wouldn’t expect to spend more than ten or fifteen minutes, at most, looking for this info. The loose “contract” here is that the maintainers will spend some effort making security reporting procedures easy and obvious, and you’ll follow those procedures. If they haven’t put in the effort to provide a private channel, you’re not on the hook to go to extreme lengths to find it.

What’s “a reasonable amount of time”?

Once again there are no hard and fast rules; reasonable people may differ. That being said, I think there are some rough timelines that nearly everyone would consider reasonable:

- You should expect to hear back after your initial report within about a week. If you haven’t, you could try again a couple-three times. If you haven’t heard back at all within maybe two or three weeks, I think it’s safe to conclude nobody’s home and you can move on to considering a public disclosure.

- You should expect the issue to be fixed within no more than three months – or to get clear and direct communication from the project that it’ll take longer, along with a clear timeline. If the issue hasn’t been fixed in three months, and there’s no communication from the project indicating that they’re working on it, you can move on to considering public disclosure.

Three months might seem like a very long time, but security issues can be tricky. Projects usually need to create patches against multiple supported versions (a Django security fix, for example, can involve patches against as many as four different versions of Django), write up security disclosures, get a CVE issued, coordinate disclosures with upstream packagers (e.g. Linux distributions), and more. And they need to do all of this with a limited set of staff/volunteers: since this is happening in private, projects can’t call on the resources of the broader public community.

So, three months is a bit of a rough established standard. It’s long enough to allow for all of these efforts, while still not being an absurdly long time to leave an issue open. Still, this is a place where reasonable people in the security/open source communities differ; some call for longer, some for shorter. So, if you think a different time window makes more sense for you, or for the specific issue you’re dealing with, I think it’s totally reasonable to ask the project for a different time window. I’d say that there’s broad consensus on somewhere between one and six months being reasonable. As long as you’re somewhere in there, I think you’re being reasonable.
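To make the rough timelines in this section concrete, here’s a small Python sketch of the decision logic. Everything in it is an assumption for the sake of illustration: the `Report` class, the `next_step` function, and the specific numbers (three weeks with no response, and 90 days as a stand-in for “three months”) are one reading of the guidelines above, not a standard. It suggests when to start *considering* disclosure; it doesn’t replace judgment.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Rough windows taken from the discussion above; both are judgment calls.
NO_RESPONSE_WINDOW = timedelta(weeks=3)  # "maybe two or three weeks"
FIX_WINDOW = timedelta(days=90)          # approximation of "three months"


@dataclass
class Report:
    """Hypothetical record of a privately reported issue."""
    reported_on: date
    first_response_on: Optional[date] = None
    fixed_on: Optional[date] = None
    # A later deadline that the project clearly communicated, if any.
    agreed_deadline: Optional[date] = None


def next_step(report: Report, today: date) -> str:
    """Suggest what the reporter might do next, given the dates so far."""
    if report.fixed_on is not None:
        return "fixed"
    if report.first_response_on is None:
        # Nobody has answered yet: keep retrying until the window closes.
        if today - report.reported_on >= NO_RESPONSE_WINDOW:
            return "consider public disclosure"
        return "wait, retry contact"
    # The project responded: use its communicated deadline if there is one,
    # otherwise fall back to the default fix window.
    deadline = report.agreed_deadline or (report.reported_on + FIX_WINDOW)
    if today >= deadline:
        return "consider public disclosure"
    return "wait"


if __name__ == "__main__":
    report = Report(reported_on=date(2024, 1, 1), first_response_on=date(2024, 1, 3))
    print(next_step(report, today=date(2024, 3, 1)))
```

If you and the project agree on a different window, anywhere in the one-to-six-month range discussed above, setting `agreed_deadline` is how this sketch would capture it.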

What if this all falls apart?

Usually, this process works. You report an issue privately, the project fixes and discloses it on a coordinated timeline. But sometimes it doesn’t: you can’t contact the maintainers, or they blow you off, or go silent and never release a fix, etc. I think it’s polite to try a few times – especially if you’ve initially heard back, but then the three-month window comes and goes without a fix. Open source projects aren’t always great about communication, and sometimes getting back to you about a delay might have just fallen through the cracks.

But say you’ve tried all that and made an honest effort, and it just hasn’t worked. Once you’ve made a good-faith effort to get the issue fixed privately, the balance of public safety tips toward disclosure, and a public disclosure is warranted.

I recommend making this a blog post, or a forum post, something like that – something with a permanent and stable URL. A post to social media is a bit more ephemeral, and hard to link to; you want something people can easily find and pass around.

Other references/guides/further reading

Some further reading and other related resources you might find useful:

- Django’s guide to reporting and security processes. I don’t know that we do it especially better or worse than most open source projects, so this is less of a brag and more of a representative example of what you can expect these things to look like.

- A guide for maintainers from Google’s open source office – a reasonable enough starting point for maintainers who don’t have a process and want one.

- Another guide for maintainers from GitHub. This covers some of the GitHub-specific tools you might choose to use. Also see GitHub’s documentation of their security advisory features. I’m not overly thrilled with GitHub’s tooling here – it’s fine, just kinda minimal. It’s probably a good choice if you’re a small project already using GitHub heavily for everything else, but might not be the best choice for larger projects.

- Google Project Zero FAQ. Project Zero is a vulnerability research team at Google that looks for vulnerabilities in non-Google software. This document explains their disclosure policies, and has a nuanced discussion of this three-month window and how it works in practice.

What’d I miss?

If you have other questions I haven’t answered, feel free to get in touch and I’ll try to add them.